Common SEO Issues

Meta Title

Your page's meta title (strictly speaking, the HTML <title> element rather than a meta tag) defines the title of your page. Search engines display it in their results, browsers show it in the tab or title bar, and it is used as the default name when your page is bookmarked. A concise, descriptive title that accurately reflects your page's topic is important for ranking well in search engines.

<title>Not a Meta Tag, but required anyway </title>
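A minimal sketch of how a title check like this might work, using only Python's standard-library html.parser. The 60-character limit is an assumption (a common rule of thumb for what fits in a results snippet), not a documented search-engine rule, and check_title is a hypothetical helper name.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None  # None means no <title> tag was seen

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # handle_data may be called several times for one element, so accumulate.
        if self._in_title:
            self.title += data


def check_title(html, max_length=60):
    """Return a list of problems with the page title (an empty list means OK).

    max_length is an assumed rule-of-thumb limit, not an official one.
    """
    parser = TitleParser()
    parser.feed(html)
    title = (parser.title or "").strip()
    if not title:
        return ["missing or empty <title>"]
    if len(title) > max_length:
        return [f"title is {len(title)} characters, longer than {max_length}"]
    return []
```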

Meta Description

Your page's meta description is an HTML tag that provides a brief, accurate summary of your page, and you should include one on every page. Search engines may use it to help understand your page's topic, and they often display it directly as the snippet below your title in search results. Google has stated that it does not use the meta description as a ranking signal, but a well-written, inviting description can still raise click-through rates to your site.

<meta name="description" content="Awesome Description Here">
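A similar sketch can check the description tag: it should exist once and not be empty. The 160-character ceiling below is an assumed rule of thumb for snippet length, and check_meta_description is a hypothetical name.

```python
from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Collects the content of every <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.descriptions = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # html.parser already lowercases tag and attribute names
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.descriptions.append((attrs.get("content") or "").strip())


def check_meta_description(html, max_length=160):
    """Return a list of problems (empty list means OK); max_length is an assumption."""
    parser = MetaDescriptionParser()
    parser.feed(html)
    found = parser.descriptions
    if not found:
        return ["missing meta description"]
    problems = []
    if len(found) > 1:
        problems.append(f"{len(found)} meta descriptions found, expected one")
    if not found[0]:
        problems.append("meta description is empty")
    elif len(found[0]) > max_length:
        problems.append(f"meta description is {len(found[0])} characters, longer than {max_length}")
    return problems
```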

<h1> Headings

H1 headings are HTML tags that are visible to users and also help clarify the overall theme or purpose of your page to search engines. The H1 tag represents the most important heading on your page, e.g., the title of the page or blog post.

<h1> Awesome Title </h1>
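A minimal sketch of an H1 check, assuming the common convention of exactly one non-empty <h1> per page (a guideline, not a requirement of HTML itself). check_h1 is a hypothetical helper name.

```python
from html.parser import HTMLParser


class H1Parser(HTMLParser):
    """Collects the text of every <h1> element, including text in nested tags."""

    def __init__(self):
        super().__init__()
        self.h1_texts = []
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._in_h1 = True
            self.h1_texts.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.h1_texts[-1] += data


def check_h1(html):
    """Return a list of problems with the page's <h1> headings (empty list means OK)."""
    parser = H1Parser()
    parser.feed(html)
    texts = [text.strip() for text in parser.h1_texts]
    if not texts:
        return ["no <h1> heading found"]
    problems = []
    if len(texts) > 1:
        problems.append(f"{len(texts)} <h1> headings found, expected one")
    if any(not text for text in texts):
        problems.append("empty <h1> heading")
    return problems
```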

<h2> Headings

H2 headings are visible HTML tags that help clarify the structure of your page for both users and search engines. The H2 tag represents the second most important level of heading on your page, e.g., the subheadings under the main H1 title.

<h2> Awesome Subtitle </h2>
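Because headings form levels, a checker can also look at the page outline as a whole. This sketch assumes the common guideline that headings should not skip levels (for example, an <h1> followed directly by an <h3>); skipped_levels is a hypothetical name, and a previous level of 0 means no heading came before.

```python
import re
from html.parser import HTMLParser

HEADING = re.compile(r"h([1-6])")


class OutlineParser(HTMLParser):
    """Builds a flat outline: a list of [level, text] for every h1-h6 element."""

    def __init__(self):
        super().__init__()
        self.outline = []
        self._current = None

    def handle_starttag(self, tag, attrs):
        match = HEADING.fullmatch(tag)
        if match:
            self._current = [int(match.group(1)), ""]
            self.outline.append(self._current)

    def handle_endtag(self, tag):
        if HEADING.fullmatch(tag):
            self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self._current[1] += data


def skipped_levels(html):
    """Return (level, text, previous_level) for headings that jump down more than one level."""
    parser = OutlineParser()
    parser.feed(html)
    problems = []
    previous = 0
    for level, text in parser.outline:
        if level > previous + 1:
            problems.append((level, text.strip(), previous))
        previous = level
    return problems
```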

Robots.txt

Check if your website is using a robots.txt file. When search engine robots crawl a website, they typically first request the site's robots.txt file, which tells Googlebot and other crawlers which parts of your site they may and may not crawl. If your site lacks a robots.txt file, crawlers assume they may crawl everything. The file can keep crawlers away from sections you don't want crawled, save bandwidth, and reduce load on your server, but it is not a security measure: it is publicly readable, and a disallowed URL can still appear in search results if other sites link to it. A missing robots.txt file also generates additional 404 errors in your Apache logs whenever robots request one.

User-agent: *
Disallow:

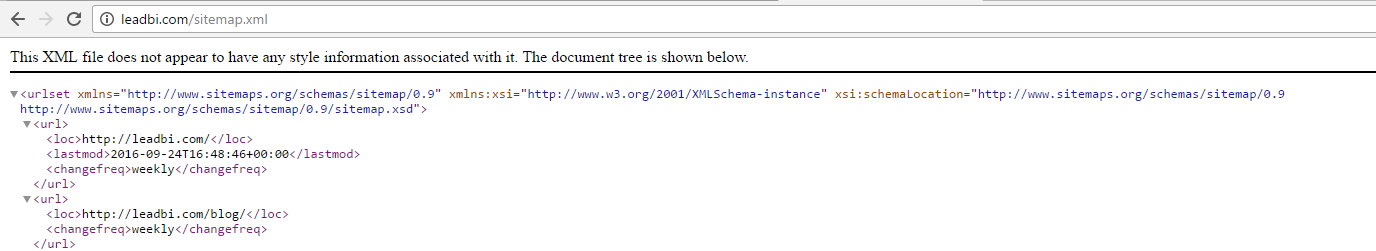
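Two details are easy to get wrong: robots.txt always lives at the root of the host, and an empty Disallow line permits everything. The sketch below shows both using Python's standard urllib.robotparser; robots_url and parse_robots are hypothetical helper names, and example.com is a placeholder domain.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_url(page_url):
    """Crawlers look for robots.txt at the root of the host, whatever page they start from."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def parse_robots(text):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


# The minimal file above: every user agent, and an empty Disallow, i.e. nothing is blocked.
allow_all = parse_robots("User-agent: *\nDisallow:\n")
```

In practice you would download the file from robots_url(...) (RobotFileParser also has set_url() and read() for that) before calling can_fetch().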

Sitemap

Check if the website has a sitemap. A sitemap lists the pages on your site that you want search engines to know about, which lets crawlers crawl the site more intelligently, and it can also provide useful metadata about each page, such as when it was last modified. If your site lacks a sitemap file, consider adding one: sitemaps can help robots index your content more thoroughly and quickly. Read more in Google's guidelines for implementing the sitemap protocol.

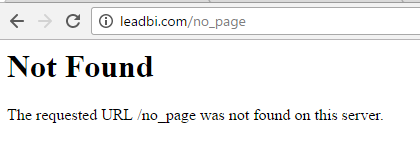
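A minimal sketch of generating an XML sitemap in the sitemaps.org format with Python's xml.etree.ElementTree. The page list and the build_sitemap name are hypothetical; a production file would usually also start with an XML declaration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (absolute_url, lastmod_or_None) pairs; returns the XML as a string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as &
        if lastmod:
            # W3C date format, e.g. 2016-10-01
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    ("https://example.com/", "2016-10-01"),
    ("https://example.com/blog", None),
])
```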

Broken Links

Check if your website has any broken or dead links. This tool scans your website to locate both broken internal links (pointing within your website) and broken external links (pointing outside of your website). Broken links hurt the user experience and can damage your website's overall ranking with search engines.

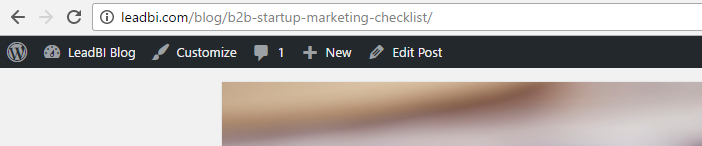
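A link checker of this kind has two parts: collect the links on a page and sort them into internal and external, then request each one and look at the HTTP status. This is a minimal stdlib sketch with hypothetical function names; it sends HEAD requests, and since some servers reject HEAD (for example with 405), a real checker might retry those with GET.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def classify_links(html, page_url):
    """Split a page's links into (internal, external) lists of absolute URLs."""
    parser = LinkParser()
    parser.feed(html)
    host = urlsplit(page_url).netloc
    internal, external = [], []
    for href in parser.hrefs:
        url = urljoin(page_url, href)  # resolve relative links against the page URL
        if urlsplit(url).scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar
        (internal if urlsplit(url).netloc == host else external).append(url)
    return internal, external


def link_status(url, timeout=10):
    """Return the HTTP status code for url, or None if no response was received."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses are raised as HTTPError
    except OSError:
        return None  # DNS failure, refused connection, timeout, ...
```

A link is usually reported as broken when link_status returns None or a code of 400 or higher.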

SEO Friendly URL

Check if your web page URLs are SEO friendly. SEO-friendly URLs contain keywords relevant to the page's topic and no spaces, underscores, or other special characters. Avoid URL parameters where possible, as they make URLs less inviting for users to click or share.

Google's suggestions for URL structure recommend using hyphens (-) rather than underscores (_) to separate words, because Google treats hyphens as separators between words in a URL and does not treat underscores that way.
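A minimal sketch of both sides of this advice: turning a page title into a hyphen-separated slug, and flagging URLs that break the rules above. slugify and url_problems are hypothetical names, and the slug rule assumes ASCII-only URLs (accents are stripped rather than kept).

```python
import re
import unicodedata
from urllib.parse import urlsplit


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Decompose accented characters (é -> e + combining accent), then drop non-ASCII.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Collapse each run of spaces, underscores and punctuation into a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def url_problems(url):
    """Flag underscores, spaces and query parameters in a URL."""
    parts = urlsplit(url)
    problems = []
    if "_" in parts.path:
        problems.append("underscore in path, prefer hyphens")
    if " " in parts.path or "%20" in parts.path:
        problems.append("space in path")
    if parts.query:
        problems.append("query parameters")
    return problems
```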

Image Alt Text

Check if images on your webpage are using alt attributes. If an image cannot be displayed (e.g., due to a broken image source or a slow internet connection), the alt attribute provides alternative text; screen readers also read it aloud. Using relevant keywords and text in the alt attribute can help both users and search engines better interpret the subject of an image.

<img src="http://leadbi.com/blog/wp-content/uploads/2016/10/seo_friendly_url.png" alt="SEO Friendly URL"/>
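A minimal sketch of an alt-attribute check with html.parser. It treats alt="" as deliberate (the usual markup for purely decorative images) and only flags images with no alt attribute at all; images_missing_alt is a hypothetical name.

```python
from html.parser import HTMLParser


class ImageAltParser(HTMLParser):
    """Records the src of every <img> that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags are also routed here by HTMLParser.
        if tag == "img":
            attrs = dict(attrs)
            if attrs.get("alt") is None:  # absent, or present with no value
                self.missing_alt.append(attrs.get("src", "(no src)"))


def images_missing_alt(html):
    parser = ImageAltParser()
    parser.feed(html)
    return parser.missing_alt
```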

Inline CSS

Check your webpage HTML tags for inline CSS properties. Inline CSS properties are added by using the style attribute within specific HTML tags. They unnecessarily increase page size and can be moved to an external CSS stylesheet. Removing inline CSS properties can improve page loading time and make site maintenance easier.

<p style="color:gray;">Awesome Description </p>
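A minimal sketch of finding inline styles and counting how often each one repeats; a value used on many tags is a natural candidate for a single class in an external stylesheet. inline_styles is a hypothetical name.

```python
from collections import Counter
from html.parser import HTMLParser


class InlineStyleParser(HTMLParser):
    """Counts how many tags use each distinct style attribute value."""

    def __init__(self):
        super().__init__()
        self.styles = Counter()

    def handle_starttag(self, tag, attrs):
        style = dict(attrs).get("style")
        if style:
            self.styles[style.strip()] += 1


def inline_styles(html):
    parser = InlineStyleParser()
    parser.feed(html)
    return parser.styles
```

For the example above, two paragraphs sharing style="color:gray;" could both use something like class="muted" (a hypothetical class name) defined once as .muted { color: gray; } in the stylesheet.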

Deprecated HTML

Check if your webpage is using old, deprecated HTML tags. Browsers may eventually drop support for these tags, and your web pages may then render incorrectly.

<!-- deprecated tag --> <applet code="Example.class" width="350" height="350"> Java applet that draws animated bubbles. </applet>

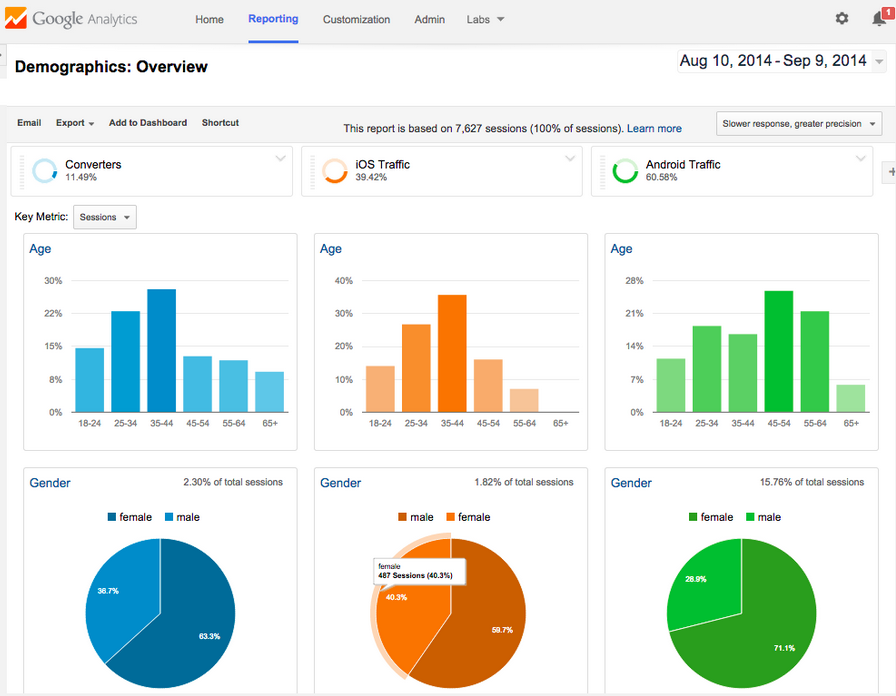
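A minimal sketch of a deprecated-tag scan. The tag set below is a selection of elements the HTML Living Standard lists as obsolete, not an exhaustive list, and find_deprecated is a hypothetical name.

```python
from html.parser import HTMLParser

# A selection of elements listed as obsolete in the HTML Living Standard (not exhaustive).
OBSOLETE_TAGS = {
    "acronym", "applet", "basefont", "big", "center", "dir", "font",
    "frame", "frameset", "isindex", "marquee", "noframes", "strike", "tt",
}


class DeprecatedTagParser(HTMLParser):
    """Records obsolete tags in the order they appear."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        if tag in OBSOLETE_TAGS:
            self.found.append(tag)


def find_deprecated(html):
    parser = DeprecatedTagParser()
    parser.feed(html)
    return parser.found
```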

Google Analytics

Check if your website is connected with Google Analytics. Google Analytics is a popular, free website analysis tool that helps provide insights about your site’s traffic and demographics.
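A rough sketch of how such a check might detect Google Analytics: look for the script URLs used by Google's tracking snippets, either as a script src or inside inline script text (the older analytics.js snippet loads its script from inline JavaScript). The marker strings are an assumption about the standard snippets; installs via a tag manager or a plugin may not match, and has_google_analytics is a hypothetical name.

```python
from html.parser import HTMLParser

# Assumed markers: script URLs used by the gtag.js and older analytics.js snippets.
GA_MARKERS = ("googletagmanager.com/gtag/js", "google-analytics.com/analytics.js")


class ScriptParser(HTMLParser):
    """Collects external script URLs and the text of inline scripts."""

    def __init__(self):
        super().__init__()
        self.sources = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self._in_script = True
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        if self._in_script:
            self.sources.append(data)  # inline snippet text


def has_google_analytics(html):
    parser = ScriptParser()
    parser.feed(html)
    return any(marker in text for text in parser.sources for marker in GA_MARKERS)
```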

Favicon

Check if your site is using and correctly implementing a favicon. Favicons are small icons that appear in your browser's tabs and address bar. They are also saved next to your page's title when it is bookmarked. This helps brand your site and makes it easy for users to find your site in a list of bookmarks.
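A minimal sketch of finding a page's favicon: look for a <link> whose rel tokens include "icon" (which also matches rel="shortcut icon"), and otherwise fall back to /favicon.ico at the site root, the location browsers conventionally try. favicon_urls is a hypothetical name.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class IconParser(HTMLParser):
    """Collects the href of every <link> whose rel contains the token "icon"."""

    def __init__(self):
        super().__init__()
        self.icons = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "icon" in rel_tokens and attrs.get("href"):
            self.icons.append(attrs["href"])


def favicon_urls(html, page_url):
    parser = IconParser()
    parser.feed(html)
    if parser.icons:
        return [urljoin(page_url, href) for href in parser.icons]
    # No declared icon: browsers conventionally request /favicon.ico at the root.
    return [urljoin(page_url, "/favicon.ico")]
```

A complete check would then request each URL and confirm it returns an image.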


Backlinks

Check the backlinks to your website. Backlinks are links to your website from external sites. Relevant backlinks from authoritative sites are critical for higher search engine rankings. Our backlink checker also helps identify low-quality backlinks that can lead to search engine penalties for your website.

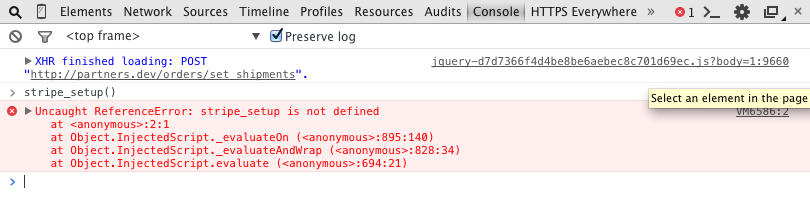

JS Errors

Check your page for JavaScript errors. These errors may prevent users from properly viewing your pages and impact their user experience. Sites with poor user experience tend to rank worse in search engine results.

Social Media

Check if your page is connected to one or more of the popular social networks. Social signals are often cited as increasingly important factors that help search engines validate a site's trustworthiness and authority.

Advanced SEO

Microdata Schema

Check if your website uses HTML Microdata (structured data markup). Search engines use microdata to better understand the content of your site and to create rich snippets in search results, which can increase click-through rates to your site. If your web page does not use microdata to mark up structured data, see Google's guide to getting started with microdata.

<div itemscope itemtype="http://schema.org/Book"> <span itemprop="name"> Awesome Book </span> <span itemprop="author">Awesome Author</span> </div>
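To show what a search engine can read from the markup above, here is a deliberately flat sketch that records itemtype values and the text of each itemprop. It ignores nested itemscope items and is not a full microdata parser; extract_microdata is a hypothetical name.

```python
from html.parser import HTMLParser


class MicrodataParser(HTMLParser):
    """Flat sketch: records itemtypes and itemprop values, ignoring item nesting."""

    def __init__(self):
        super().__init__()
        self.itemtypes = []
        self.props = {}
        self._prop = None  # itemprop whose text is being collected
        self._tag = None   # tag name that will close that itemprop

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "itemscope" in attrs and attrs.get("itemtype"):
            self.itemtypes.append(attrs["itemtype"])
        prop = attrs.get("itemprop")
        if prop:
            if "content" in attrs:  # e.g. <meta itemprop="..." content="...">
                self.props[prop] = attrs["content"] or ""
            else:
                self._prop, self._tag = prop, tag
                self.props[prop] = ""

    def handle_endtag(self, tag):
        if tag == self._tag:
            self._prop = self._tag = None

    def handle_data(self, data):
        if self._prop:
            self.props[self._prop] += data


def extract_microdata(html):
    parser = MicrodataParser()
    parser.feed(html)
    return parser.itemtypes, {name: value.strip() for name, value in parser.props.items()}
```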

Noindex Tag

Check if your web page is using the noindex meta tag. This tag instructs search engines not to show your page in search results.

<meta name="robots" content="noindex, nofollow">
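The content attribute of the robots meta tag is a comma-separated list of directives. A minimal sketch of reading them (robots_directives is a hypothetical name):

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            for token in (attrs.get("content") or "").split(","):
                if token.strip():
                    self.directives.add(token.strip().lower())


def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives
```

A page would be flagged as hidden from search results when "noindex" is in the returned set.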

Canonical Tag

Check if your web page is using the canonical link tag. The canonical link tag is used to nominate a primary page when you have several pages with duplicate or very similar content.

<link rel="canonical" href="http://example.com/blog" />
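A minimal sketch of reading the canonical URL so it can be compared with the URL the page was fetched from; the first canonical link wins, and relative hrefs are resolved against the page URL. canonical_url is a hypothetical name.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical">."""

    def __init__(self):
        super().__init__()
        self.href = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_tokens and self.href is None:
            self.href = attrs.get("href")


def canonical_url(html, page_url):
    """Return the absolute canonical URL, or None if the page declares none."""
    parser = CanonicalParser()
    parser.feed(html)
    return urljoin(page_url, parser.href) if parser.href else None
```

For example, a tracking-parameter variant such as http://example.com/blog?utm_source=x that declares the tag above points search engines back to http://example.com/blog.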

Nofollow Tag

Check if your web page uses the nofollow attribute on links. Outgoing links marked with rel="nofollow" tell search engines not to follow or crawl that particular link (a nofollow value in the robots meta tag applies the same instruction to every link on the page). Google recommends using nofollow for paid advertisements on your site and for links to pages that have not been vetted as trusted sites (e.g., links posted by users of your site).

<a href="http://www.example.com/" rel="nofollow">Link text</a>
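A minimal sketch of listing qualified outgoing links. Besides nofollow, Google also accepts the more specific rel="sponsored" (paid links) and rel="ugc" (user-generated content) values, so the sketch treats all three as qualifying; nofollow_links is a hypothetical name.

```python
from html.parser import HTMLParser

QUALIFYING_REL = {"nofollow", "sponsored", "ugc"}


class QualifiedLinkParser(HTMLParser):
    """Collects hrefs of <a> tags whose rel contains a qualifying token."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        # rel is a space-separated token list, e.g. rel="sponsored noopener"
        rel_tokens = set((attrs.get("rel") or "").lower().split())
        if rel_tokens & QUALIFYING_REL and attrs.get("href"):
            self.links.append(attrs["href"])


def nofollow_links(html):
    parser = QualifiedLinkParser()
    parser.feed(html)
    return parser.links
```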

Disallow Directive

Check if your robots.txt file is instructing search engine crawlers to avoid parts of your website.

The Disallow directive in robots.txt tells search engines not to crawl a file, page, or directory. Note that it blocks crawling rather than indexing: a disallowed URL can still appear in search results if other pages link to it.

User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /readme.html
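You can test rules like these before deploying them with Python's urllib.robotparser. One caveat: Python's parser applies the first matching rule in file order, while Google uses the most specific (longest) matching path, so results can differ for overlapping rules; for this file they agree. The allowed helper and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /readme.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, agent="Googlebot"):
    """True if the rules above let the given user agent crawl url."""
    return parser.can_fetch(agent, url)
```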

SPF Records

Check if your DNS records contain an SPF record. SPF (Sender Policy Framework) records allow email systems to verify whether a given mail server has been authorized to send mail on behalf of your domain. Creating an SPF record can improve email delivery rates by reducing the likelihood of your email being marked as spam.
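Looking up TXT records needs a DNS query (for example `dig TXT example.com`), which Python's standard library does not provide, so this sketch assumes you already have the domain's TXT strings. An SPF record is the TXT record whose first token is v=spf1, and RFC 7208 treats more than one such record as a permanent error. The sample record uses the 192.0.2.0/24 documentation range and is hypothetical, as are the function names.

```python
def spf_records(txt_records):
    """Keep only TXT strings whose first token is the SPF version tag v=spf1."""
    return [record for record in txt_records if record.lower().split()[:1] == ["v=spf1"]]


def check_spf(txt_records):
    """Return a list of problems with the domain's SPF setup (empty list means OK)."""
    found = spf_records(txt_records)
    if not found:
        return ["no SPF record found"]
    if len(found) > 1:
        # RFC 7208: more than one SPF record makes the check fail with a permerror.
        return [f"{len(found)} SPF records found, RFC 7208 allows only one"]
    return []


# Hypothetical TXT records for a domain whose only mail server is 192.0.2.10.
example_records = ["google-site-verification=abc", "v=spf1 ip4:192.0.2.10 -all"]
```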


LeadBI

The lead generation software that uncovers your website visitors and turns them into leads.


LeadBI | Marketing Automation Made Simple

Dublin - Maple Avenue, Stillorgan Industrial Park